Introduction to Modern Computing Architectures

Modern computing architectures represent the foundation of today’s technological advancements, powering everything from personal devices to global cloud infrastructures. Unlike traditional computing systems, modern architectures are designed to handle high-performance workloads, artificial intelligence, big data analytics, and real-time applications, often across distributed and hybrid environments. These architectures extend classical computing principles by blending CPU-based processing, GPU acceleration, and parallelism with emerging technologies such as quantum and neuromorphic computing to meet the growing demands of modern users.

Historically, computing relied heavily on the Von Neumann architecture, in which a processor fetches both instructions and data from a shared memory. While this architecture laid the groundwork for modern computing, it struggles with the complexity and scale of today’s applications. Modern computing architectures have evolved to overcome its bottlenecks, offering faster computation, lower latency, higher efficiency, and adaptability to diverse workloads.

The significance of modern computing architectures spans multiple domains:

- Scientific Research: Large-scale simulations in physics, climate modeling, and genomics rely on parallel and distributed architectures for computational efficiency.

- Artificial Intelligence and Machine Learning: GPUs, TPUs, and specialized AI accelerators are optimized to process complex neural network computations.

- Enterprise and Cloud Applications: Data centers employ cloud architectures with virtualization, containerization, and hybrid systems for scalable service delivery.

- Edge and IoT Applications: Decentralized edge computing architectures bring real-time processing close to data sources, enabling smart cities, autonomous vehicles, and industrial IoT.

Fact: According to MarketsandMarkets, the global high-performance computing (HPC) market is projected to reach $52.5 billion by 2027, driven by innovations in modern computing architectures and AI workloads.

In this article, we will explore the types of modern computing architectures, the key technologies behind them, real-world applications, benefits, challenges, and future trends, showing how these architectures are shaping the future of computing.

Key Types of Modern Computing Architectures

The landscape of modern computing architectures is diverse, reflecting the variety of applications and workloads in today’s technology-driven world. From traditional CPU-based systems to advanced AI accelerators and quantum processors, each architecture offers unique advantages, design principles, and use cases. Understanding these types is essential for researchers, engineers, and businesses looking to optimize performance and efficiency.

Von Neumann Architecture (Traditional Basis)

The Von Neumann architecture is the foundation of most classical computing systems. It consists of a central processing unit (CPU), a single memory that stores both program instructions and data, and input/output systems, with a shared bus connecting these components. While this architecture is simple and effective for general-purpose computing, it has notable limitations:

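- Bottleneck in memory access: Because instructions and data share one path to memory, the CPU often waits for data to move between memory and the processor (the so-called Von Neumann bottleneck).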

- Limited parallelism: Processing is primarily sequential, restricting performance for complex computations.

- Inefficient for modern AI workloads: Funneling the high data volumes of machine learning and big data through a single memory path makes processing slow.

Despite its limitations, the Von Neumann architecture remains relevant as a baseline model, forming the basis for understanding and evolving modern computing systems.
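To make the shared-bus bottleneck concrete, here is a minimal Python sketch of a hypothetical accumulator machine in which program instructions and data live in the same memory list, and every instruction fetch, load, and store is counted as one trip across the single bus. The class name and the `LOAD`/`ADD`/`STORE`/`HALT` instruction set are invented for illustration only.

```python
from dataclasses import dataclass

@dataclass
class ToyVonNeumann:
    """Hypothetical accumulator machine: one memory holds both code and data."""
    memory: list
    acc: int = 0
    pc: int = 0
    bus_accesses: int = 0  # every memory read or write crosses the single bus

    def read(self, addr):
        self.bus_accesses += 1
        return self.memory[addr]

    def write(self, addr, value):
        self.bus_accesses += 1
        self.memory[addr] = value

    def run(self):
        while True:
            op, arg = self.read(self.pc)  # instruction fetch also uses the bus
            self.pc += 1
            if op == "LOAD":
                self.acc = self.read(arg)
            elif op == "ADD":
                self.acc += self.read(arg)
            elif op == "STORE":
                self.write(arg, self.acc)
            elif op == "HALT":
                return self.acc
            else:
                raise ValueError(f"unknown opcode {op!r}")

# Addresses 0-3 hold the program; addresses 4-6 hold data (2, 3, and a result slot).
program_and_data = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", None), 2, 3, 0]
machine = ToyVonNeumann(program_and_data)
print(machine.run(), machine.bus_accesses)  # 5 7
```

Four of the seven bus transactions are instruction fetches, so even this tiny program spends most of its memory traffic just retrieving code, and a faster processor cannot help while it waits on that one path.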

Parallel and Distributed Architectures

Modern workloads often require massive computational power, which single-core or even multi-core CPUs cannot efficiently handle. Parallel computing splits tasks across multiple cores or processors, while distributed computing spreads workloads across multiple machines connected over a network.

Key features:

- Multi-core processors: Execute multiple tasks simultaneously on the same chip.

- Clustered and grid systems: Multiple computers work together to solve large problems.

- Cloud-based distributed architectures: Virtualized resources allow elastic scaling for enterprise applications.

Use cases: Weather forecasting, large-scale scientific simulations, and big data analytics rely heavily on these architectures to reduce computation time and handle complex datasets.
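The split-and-combine pattern behind parallel and distributed computing can be sketched with Python's standard `concurrent.futures` module. This minimal example, built around a hypothetical `parallel_sum_of_squares` helper, divides a range into chunks, processes each chunk in a separate worker, and then merges the partial results. It uses a thread pool so it runs anywhere; for CPU-bound pure-Python work, `ProcessPoolExecutor` (or separate machines, in a distributed setting) is what actually delivers parallel speedup, because CPython's global interpreter lock lets only one thread execute Python bytecode at a time.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(start: int, stop: int) -> int:
    """The task each worker runs on its own slice of the input."""
    return sum(i * i for i in range(start, stop))

def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Split [0, n) into chunks, process them concurrently, then combine."""
    if n <= 0:
        return 0
    chunk = (n + workers - 1) // workers  # ceiling division so no element is dropped
    bounds = [(lo, min(lo + chunk, n)) for lo in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda b: sum_of_squares(*b), bounds)
        return sum(partials)  # reduction step: merge the partial results

print(parallel_sum_of_squares(1_000))  # 332833500
```

The same decomposition scales from cores to clusters: only the executor changes, while the chunking and the final reduction stay the same.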

Multi-core and Many-core Architectures

Multi-core processors integrate two or more cores into a single CPU, allowing multiple processes to execute concurrently. Many-core processors extend this concept to dozens or even hundreds of cores, offering high throughput and efficiency for specialized tasks.

Benefits:

- Faster computation for multi-threaded applications

- Improved energy efficiency compared to multiple single-core processors

- Essential for modern AI, HPC, and graphics-intensive applications

Example: AMD’s EPYC server processors and Ryzen Threadripper workstation processors use high-core-count designs to deliver strong performance for server workloads and professional content creation.
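Extra cores only speed up the part of a program that can run in parallel. Amdahl's law, a standard model not named in the text above, makes this quantitative: if a fraction p of the work parallelizes perfectly across n cores, the ideal speedup is S(n) = 1 / ((1 - p) + p / n). A minimal Python sketch:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when `parallel_fraction` of the runtime scales across `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction  # this part never gets faster
    return 1.0 / (serial + parallel_fraction / cores)

for cores in (2, 8, 64):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

With 90% of the work parallel, 64 cores give less than a 9x speedup, and no core count can exceed 1 / (1 - 0.9) = 10x. This is why many-core designs pay off mainly for workloads that are almost entirely parallel.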

GPU and Accelerated Computing Architectures

Graphics Processing Units (GPUs) have become central to modern computing architectures due to their parallel processing capabilities. Unlike CPUs, which use a small number of powerful cores optimized for fast sequential execution, GPUs contain thousands of simpler cores that excel at massively parallel computations, making them ideal for AI, machine learning, and scientific simulations.

Specialized accelerators:

- TPUs (Tensor Processing Units): Designed for deep learning workloads

- NPUs (Neural Processing Units): Optimized for AI inference in mobile and edge devices

Impact: GPUs and accelerators significantly reduce training time for deep neural networks, enabling breakthroughs in AI applications such as natural language processing, computer vision, and autonomous vehicles.
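GPUs get their throughput by running the same small function, called a kernel, on thousands of threads at once, each identified by its block and thread index. The sketch below emulates that model sequentially in plain Python for SAXPY (y = a * x + y). The `launch` helper and its parameters are hypothetical stand-ins for a real GPU kernel launch, not an actual CUDA API, and real hardware runs the threads concurrently rather than in nested loops.

```python
import math

def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y):
    """One GPU-style thread: compute a global index and update one element."""
    i = block_idx * block_dim + thread_idx  # mirrors blockIdx.x * blockDim.x + threadIdx.x
    if i < len(x):  # bounds check: the grid may contain more threads than elements
        y[i] = a * x[i] + y[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Hypothetical launcher: runs every (block, thread) pair one after another."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0] * len(x)
block_dim = 2
grid_dim = math.ceil(len(x) / block_dim)  # enough blocks to cover every element
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y)
print(y)  # [12.0, 14.0, 16.0, 18.0, 20.0]
```

Because no thread depends on another's result, the hardware is free to schedule them in any order and in parallel, which is exactly the property that makes neural-network arithmetic a good fit for GPUs.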

Cloud and Edge Computing Architectures

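Cloud and edge computing, along with hybrids of the two, address modern data processing challenges from opposite directions: cloud computing centralizes resources, while edge computing distributes them toward where data is generated.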

Cloud computing architecture:

- Centralized data centers with virtualization and containerization

- Offers scalable, on-demand resources for enterprise applications

- Reduces infrastructure costs and enables global accessibility

Edge computing architecture:

- Processes data closer to the source (IoT devices, sensors, and gateways)

- Reduces latency and bandwidth requirements for real-time applications

- Supports autonomous vehicles, smart cities, and industrial automation

Hybrid architectures combine cloud and edge computing to balance scalability, performance, and real-time processing needs.
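A hybrid system has to decide, task by task, where work should run. The sketch below shows one possible placement rule, assuming a simple latency model (network round trip plus compute time) and entirely hypothetical timing figures: prefer the cloud for its larger capacity, fall back to the edge when only the edge can meet the deadline, and reject the task if neither can. The `Site` class and `place_task` function are illustrative names, not part of any real framework.

```python
from dataclasses import dataclass

@dataclass
class Site:
    name: str
    round_trip_ms: float  # network latency to reach the site (hypothetical)
    compute_ms: float     # time to process one task there (hypothetical)

    def response_ms(self) -> float:
        return self.round_trip_ms + self.compute_ms

def place_task(deadline_ms: float, edge: Site, cloud: Site) -> str:
    """Prefer the cloud for capacity, but use the edge for tight deadlines."""
    if cloud.response_ms() <= deadline_ms:
        return cloud.name
    if edge.response_ms() <= deadline_ms:
        return edge.name
    return "reject"  # neither site can meet the deadline

edge = Site("edge", round_trip_ms=2.0, compute_ms=15.0)
cloud = Site("cloud", round_trip_ms=60.0, compute_ms=5.0)
print(place_task(20.0, edge, cloud))   # edge  (the cloud needs 65 ms)
print(place_task(200.0, edge, cloud))  # cloud
```

A real hybrid scheduler would also weigh bandwidth cost, data privacy, and current load, but the deadline check captures why latency-sensitive work such as vehicle control stays at the edge.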

Quantum Computing Architectures

Quantum computing represents a revolutionary approach to computation, leveraging qubits, superposition, and entanglement to solve certain problems that are infeasible for classical computers.
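Superposition and entanglement can be illustrated with a tiny classical state-vector simulation. This minimal Python sketch (not a quantum SDK) tracks the four complex amplitudes of a two-qubit system, applies a Hadamard gate to put the first qubit into superposition, and then applies a CNOT gate to entangle the pair, producing the Bell state (|00⟩ + |11⟩)/√2. The function names are illustrative.

```python
import math

# Amplitudes indexed by basis state |q0 q1>, with index = 2*q0 + q1.
State = list[complex]

def hadamard_q0(state: State) -> State:
    """Apply H to qubit 0: |0> -> (|0>+|1>)/sqrt(2), |1> -> (|0>-|1>)/sqrt(2)."""
    s = 1 / math.sqrt(2)
    new = [0j] * 4
    for q1 in (0, 1):
        a0, a1 = state[q1], state[2 + q1]  # amplitudes with q0 = 0 and q0 = 1
        new[q1] = s * (a0 + a1)
        new[2 + q1] = s * (a0 - a1)
    return new

def cnot_q0_q1(state: State) -> State:
    """CNOT with qubit 0 as control: flip qubit 1 whenever qubit 0 is 1."""
    return [state[0], state[1], state[3], state[2]]

def probabilities(state: State) -> dict[str, float]:
    """Measurement probability of each basis state, keyed as 'q0q1'."""
    return {f"{i >> 1}{i & 1}": abs(a) ** 2 for i, a in enumerate(state)}

bell = cnot_q0_q1(hadamard_q0([1 + 0j, 0j, 0j, 0j]))  # start in |00>
print(probabilities(bell))
```

Only `00` and `11` end up with non-zero probability (0.5 each, up to floating-point rounding), so measuring one qubit determines the other. Note that this classical simulation needs 2^n amplitudes for n qubits, which is precisely why simulating large quantum systems on classical hardware stops scaling.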

Key types:

- Gate-based quantum computers: Universal systems capable of running quantum algorithms

- Quantum annealers: Special-purpose systems designed for complex optimization problems

Applications: Cryptography, drug discovery, optimization, and simulation of quantum systems.

Challenge: Quantum architectures are still emerging, with high costs, error rates, and technical complexities limiting widespread adoption.

Neuromorphic and Bio-inspired Architectures

Neuromorphic computing mimics the structure and function of the human brain, using spiking neurons and event-driven computation. These architectures are designed to process information efficiently with low energy consumption, making them ideal for AI and sensory applications.

Advantages:

- Low power consumption compared to traditional architectures

- Highly parallel, event-driven processing

- Ideal for robotics, AI edge devices, and real-time pattern recognition

Example: Intel’s Loihi neuromorphic chip demonstrates the potential of brain-inspired architectures for energy-efficient AI applications.
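The spiking, event-driven style of computation described above is often modeled with a leaky integrate-and-fire neuron. The sketch below is a generic textbook version in plain Python, not Loihi's actual neuron model, and the `decay` and `threshold` defaults are arbitrary assumptions. The neuron accumulates input, leaks a fraction of its charge each step, and emits a spike only when its potential crosses the threshold, so downstream work happens only when those events occur.

```python
def lif_spike_times(inputs, threshold=1.0, decay=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    potential = reset
    spikes = []
    for t, current in enumerate(inputs):
        potential = potential * decay + current  # leak, then integrate the input
        if potential >= threshold:
            spikes.append(t)     # emit an event instead of a dense output value
            potential = reset    # the potential resets after firing
    return spikes

print(lif_spike_times([0.3] * 8))  # [3, 7]
print(lif_spike_times([0.0] * 8))  # []  (no input, no events, no downstream work)
```

The output is a sparse list of event times rather than a value at every step, which is the property neuromorphic hardware exploits to save energy.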

Key Components and Technologies Behind Modern Computing Architectures

Understanding modern computing architectures requires more than knowing the types; it’s essential to dive into the key components and technologies that make these systems efficient, scalable, and capable of handling complex workloads. From processors to interconnects, memory hierarchies, and AI integration, these components form the backbone of next-generation computing systems.

Processors and Cores

At the heart of every computing architecture lies the processor, which executes instructions and manages data flow. Modern architectures use multi-core, many-core, and specialized processors to optimize performance across diverse workloads.

Key components:

- CPU (Central Processing Unit): Handles general-purpose computing tasks

- GPU (Graphics Processing Unit): Excels at parallel processing for AI, simulations, and graphics

- TPU (Tensor Processing Unit) & NPU (Neural Processing Unit): Specialized accelerators for machine learning and AI inference

- Heterogeneous computing: Combining CPUs, GPUs, and accelerators in a single system to optimize performance and energy efficiency

Fact: High-performance computing systems such as the Summit supercomputer at Oak Ridge National Laboratory combine CPUs and GPUs in a heterogeneous architecture; Summit pairs IBM POWER9 CPUs with NVIDIA V100 GPUs for a theoretical peak of about 200 petaflops.
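Headline petaflop figures for supercomputers are usually theoretical peak (Rpeak) numbers: the sum of every processor's maximum floating-point rate. Measured results on the LINPACK benchmark (Rmax), which the TOP500 list ranks by, are lower. A minimal sketch of the peak arithmetic, using hypothetical node counts and per-device rates rather than Summit's real configuration:

```python
def peak_petaflops(nodes: int, devices_per_node: int, teraflops_per_device: float) -> float:
    """Theoretical peak: every device running at its maximum rate simultaneously."""
    total_teraflops = nodes * devices_per_node * teraflops_per_device
    return total_teraflops / 1000.0  # 1 petaflop = 1,000 teraflops

# Hypothetical heterogeneous machine: 1,000 nodes with 4 GPUs each at 10 TFLOPS.
gpu_peak = peak_petaflops(nodes=1_000, devices_per_node=4, teraflops_per_device=10.0)
# Add the much smaller CPU contribution: 2 CPUs per node at 1 TFLOPS each.
cpu_peak = peak_petaflops(nodes=1_000, devices_per_node=2, teraflops_per_device=1.0)
print(gpu_peak + cpu_peak)  # 42.0
```

In this made-up example the GPUs supply about 95% of the peak, which illustrates why heterogeneous systems pair general-purpose CPUs with accelerators.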

Memory Hierarchies and Storage Systems

Memory architecture plays a critical role in computing performance. Modern systems implement multi-level memory hierarchies to balance speed, capacity, and cost.

Components and types:

- Cache memory: Ultra-fast memory located near the processor for frequently accessed data

- RAM (Random Access Memory): Temporary storage for active processes

- Non-volatile memory (NVM) & Storage-class memory: Persistent storage with low latency for large datasets

- Distributed memory systems: Used in cluster and cloud architectures for scalable computation

Impact: Proper memory hierarchy design reduces latency and improves throughput, which is essential for AI, HPC, and real-time applications.
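The payoff of a memory hierarchy is commonly estimated with average memory access time (AMAT): AMAT = hit time + miss rate x miss penalty, where each level's miss penalty is the AMAT of the level below it. A minimal sketch with hypothetical latencies and miss rates:

```python
def amat_ns(levels, memory_latency_ns):
    """Average memory access time for a cache hierarchy.

    `levels` is a list of (hit_time_ns, miss_rate) tuples ordered from the
    level closest to the processor outward; misses at the last level go to memory.
    """
    penalty = memory_latency_ns
    # Work from the outermost level inward: each level's miss penalty
    # is the average access time of the level below it.
    for hit_time, miss_rate in reversed(levels):
        penalty = hit_time + miss_rate * penalty
    return penalty

# Hypothetical L1 (1 ns, 5% misses), L2 (10 ns, 20% misses), main memory at 100 ns.
print(amat_ns([(1.0, 0.05), (10.0, 0.20)], memory_latency_ns=100.0))  # about 2.5 ns
```

Even though main memory is 100x slower than L1 in this example, the average access costs only about 2.5 ns because most requests are served from cache.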

Interconnects and Network Fabric

Modern computing architectures rely on high-speed interconnects to facilitate fast communication between processors, memory, and storage systems.

Key technologies:

- PCIe (Peripheral Component Interconnect Express): Connects CPUs, GPUs, and accelerators within a single system

- NVLink and Infinity Fabric: High-bandwidth connections for GPU clusters and HPC

- InfiniBand and Ethernet: Network fabrics for distributed and cloud computing systems

Fact: Supercomputers like Fugaku in Japan use custom high-speed interconnects (Fugaku uses Fujitsu’s Tofu Interconnect D) to achieve low latency and high bandwidth, enabling efficient parallel processing for scientific simulations.
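Interconnect performance is often summarized with a simple latency-bandwidth (alpha-beta) model: transfer time is approximately latency + message size / bandwidth. A minimal sketch with hypothetical link parameters, not the specifications of any particular interconnect:

```python
def transfer_time_s(message_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Alpha-beta model: a fixed start-up latency plus size divided by bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s

# Hypothetical link: 1 microsecond latency, 25 GB/s bandwidth.
LATENCY = 1e-6
BANDWIDTH = 25e9

small = transfer_time_s(8, LATENCY, BANDWIDTH)    # 8-byte message: latency-dominated
large = transfer_time_s(1e9, LATENCY, BANDWIDTH)  # 1 GB message: bandwidth-dominated
print(f"{small * 1e6:.3f} us, {large * 1e3:.3f} ms")
```

Almost all of the small message's time is latency, while the large message's time is almost all bandwidth. Parallel programs that exchange many small messages therefore care most about latency, and those moving large arrays care most about bandwidth.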

Virtualization and Containerization

Virtualization allows multiple operating systems to run on a single physical machine, maximizing hardware utilization. Containers provide lightweight, isolated environments for applications, making it easier to deploy, scale, and manage workloads.

Benefits:

- Efficient use of server resources in cloud and enterprise environments

- Simplified deployment of AI and big data applications

- Seamless migration between physical and virtual infrastructures

Example: Kubernetes, combined with containerized applications, allows cloud providers to orchestrate thousands of workloads efficiently, reducing downtime and improving resource management.
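Part of what an orchestrator does is pack containers onto machines according to their resource requests. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, on CPU requests alone. It is a simplified illustration, not the Kubernetes scheduler's actual algorithm, which filters and scores nodes on many more criteria; the container names and sizes are hypothetical.

```python
def first_fit_decreasing(requests: dict[str, float], node_capacity: float) -> list[list[str]]:
    """Place containers (name -> CPU request) onto identical nodes, largest first."""
    nodes: list[list[str]] = []  # container names placed on each node
    free: list[float] = []       # remaining CPU on each node
    # Largest requests first; ties broken by name so the result is deterministic.
    for name, cpu in sorted(requests.items(), key=lambda kv: (-kv[1], kv[0])):
        if cpu > node_capacity:
            raise ValueError(f"{name} requests more CPU than any node has")
        for i, remaining in enumerate(free):
            if cpu <= remaining:  # the first node with enough room wins
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:  # no existing node fits, so add a new one
            nodes.append([name])
            free.append(node_capacity - cpu)
    return nodes

pods = {"api": 2, "db": 3, "cache": 1, "worker-a": 2, "worker-b": 3, "metrics": 1}
for i, placed in enumerate(first_fit_decreasing(pods, node_capacity=4)):
    print(f"node-{i}: {placed}")
```

Here 12 CPUs of requests fit exactly onto three 4-CPU nodes; tight packing like this is how orchestration improves resource utilization.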

AI and ML Integration

Modern computing architectures increasingly integrate AI-specific capabilities to handle machine learning workloads efficiently. AI accelerators, optimized memory hierarchies, and specialized interconnects allow systems to process massive datasets and complex algorithms.

Applications:

- Real-time inference in edge devices and autonomous systems

- Large-scale AI model training in cloud and HPC environments

- Predictive analytics and decision-making in enterprise systems

Example: Google’s TPU v4 is designed to accelerate deep learning workloads; Google trained its PaLM language model on TPU v4 pods, and earlier TPU generations were used to train natural language processing models such as BERT.
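Whether an accelerator is limited by its arithmetic units or by memory bandwidth can be estimated with the roofline model: attainable throughput = min(peak compute, arithmetic intensity x memory bandwidth), where arithmetic intensity is the number of floating-point operations per byte moved. The sketch below applies it to a dense matrix multiply, the core operation in neural-network layers, under two simplifying assumptions: each operand crosses memory exactly once, and the peak and bandwidth figures are hypothetical rather than TPU specifications.

```python
def matmul_intensity(m: int, n: int, k: int, bytes_per_value: int = 4) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], assuming each matrix moves once."""
    flops = 2 * m * n * k  # one multiply and one add per term
    bytes_moved = bytes_per_value * (m * k + k * n + m * n)
    return flops / bytes_moved

def attainable_flops(intensity: float, peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Roofline: performance is capped by compute or by memory traffic, whichever is lower."""
    return min(peak_flops, intensity * bandwidth_bytes_per_s)

PEAK = 100e12     # hypothetical 100 TFLOPS accelerator
BANDWIDTH = 1e12  # hypothetical 1 TB/s memory bandwidth

for size in (16, 1024):
    ai = matmul_intensity(size, size, size)
    perf = attainable_flops(ai, PEAK, BANDWIDTH)
    bound = "compute" if perf == PEAK else "memory"
    print(f"{size}x{size}: {ai:.1f} FLOP/byte, {perf / 1e12:.1f} TFLOPS ({bound}-bound)")
```

Small multiplies starve the arithmetic units while large ones saturate them, which is why AI accelerators are paired with high-bandwidth memory and why batching work into large matrix operations matters.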

Applications of Modern Computing Architectures

Modern computing architectures are not just theoretical; they have transformed how industries, researchers, and everyday users leverage technology. From high-performance computing to AI, cloud services, and IoT, these architectures power a vast range of applications that require speed, scalability, and real-time processing. Understanding these applications highlights why modern architectures are critical for innovation and productivity.

Scientific Computing and Research

Scientific computing relies heavily on parallel, multi-core, and distributed architectures to simulate complex phenomena. High-performance computing (HPC) systems perform computations that would be impossible on standard machines.

Applications include:

- Climate modeling and weather prediction: Simulating global atmospheric patterns at high resolution, fast enough to produce timely forecasts

- Genomics and bioinformatics: Processing vast DNA datasets for research and healthcare

- Physics simulations: Particle accelerators, quantum simulations, and astrophysics modeling

Fact: The Fugaku supercomputer in Japan, built on Fujitsu’s 48-core A64FX Arm processors and a high-speed interconnect, achieved about 442 petaflops on the LINPACK benchmark and has been used to accelerate climate simulations and drug discovery research.
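Many of these simulations advance a grid of values through time using stencils, in which each cell is updated from its immediate neighbors. A minimal sketch of one explicit step of 1D heat diffusion on a periodic grid (the coefficient `r` is an assumed value, and production climate codes are vastly more elaborate) shows why such workloads parallelize well: each new value depends only on nearby old values, so the grid can be split across cores or nodes that exchange only their boundary cells.

```python
def diffuse_step(u: list[float], r: float = 0.25) -> list[float]:
    """One explicit finite-difference step of 1D diffusion on a periodic grid.

    Stable for r <= 0.5. Each cell reads only its left and right neighbours,
    so disjoint chunks of the grid can be updated independently in parallel.
    """
    n = len(u)
    # u[i - 1] wraps to the last cell when i == 0; (i + 1) % n wraps the other end.
    return [u[i] + r * (u[i - 1] - 2 * u[i] + u[(i + 1) % n]) for i in range(n)]

grid = [0.0, 0.0, 1.0, 0.0, 0.0]  # a single hot cell
grid = diffuse_step(grid)
print(grid)  # [0.0, 0.25, 0.5, 0.25, 0.0]
```

Heat spreads to the neighbours while the total stays constant, and a distributed version would give each node a slice of `grid` plus one "halo" cell copied from each neighbouring node per step.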

Artificial Intelligence and Deep Learning

AI workloads require massive parallelism and specialized accelerators like GPUs and TPUs. Modern computing architectures make training complex neural networks faster and more efficient.

Applications include:

- Natural language processing (NLP): Large AI models like GPT rely on distributed GPU clusters

- Computer vision: Real-time image and video analysis for surveillance, autonomous vehicles, and robotics

- Predictive analytics: AI-driven business insights from large-scale data

Case Study: Google’s TPU-based infrastructure enables the training of models with billions of parameters, drastically reducing training time compared to CPU-only systems.

Enterprise and Cloud Services

Cloud computing architectures are at the heart of modern enterprise operations. Virtualized and containerized systems provide scalability, cost efficiency, and flexibility.

Applications include:

- SaaS applications like Microsoft 365 or Salesforce

- Big data analytics and storage in cloud data centers

- Hybrid cloud solutions for global enterprises

Example: Amazon Web Services (AWS) uses modern distributed architectures with elastic resource allocation to serve millions of customers worldwide efficiently.

Edge and IoT Applications

Edge computing architectures allow data processing closer to the source, reducing latency and bandwidth use. These architectures are essential for real-time IoT and industrial applications.

Applications include:

- Autonomous vehicles: Real-time decision-making for navigation and safety

- Smart cities: Traffic management, surveillance, and environmental monitoring

- Industrial IoT: Predictive maintenance and operational efficiency in manufacturing

Fact: Gartner has predicted that by 2025, 75% of enterprise-generated data will be created and processed outside a traditional centralized data center or cloud, emphasizing the growing importance of edge architectures.
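One common way edge nodes cut latency and bandwidth is to summarize raw sensor readings locally and forward only a compact summary plus any anomalies. A minimal sketch, in which the field names, the window of readings, and the alert threshold are all hypothetical:

```python
from statistics import mean

def summarize_window(readings: list[float], alert_threshold: float) -> dict:
    """Reduce a window of raw readings to the few values worth sending upstream."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Only out-of-range values are forwarded individually.
        "anomalies": [r for r in readings if r > alert_threshold],
    }

window = [20.0, 20.5, 21.0, 35.0]  # e.g. temperature samples in degrees C
print(summarize_window(window, alert_threshold=30.0))
```

Instead of streaming every sample to the cloud, the device sends one small record per window, and the anomaly list lets a local controller react immediately without a round trip.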

Emerging Applications in Quantum and Neuromorphic Systems

Quantum and neuromorphic architectures are opening doors to next-generation applications.

Quantum computing applications:

- Optimization problems in logistics and finance

- Drug discovery and molecular simulations

- Cryptography and security

Neuromorphic computing applications:

- Low-power AI for robotics and wearable devices

- Real-time sensory processing in autonomous systems

- Brain-inspired computation for adaptive learning

Impact: While still emerging, these architectures may deliver large gains in computational efficiency and, for certain problems, capabilities that classical systems cannot practically match.

Benefits of Modern Computing Architectures

The value of modern computing architectures lies not in their complexity but in the tangible benefits they provide across industries, research, and everyday computing. These architectures are designed to improve performance, efficiency, and scalability and to enable innovation, making them indispensable in the modern technology ecosystem.

1. Enhanced Computational Speed and Efficiency

Modern architectures, including multi-core CPUs, GPUs, and TPUs, enable massively parallel processing, allowing some tasks that would take days on traditional systems to complete in hours or minutes.

Key points:

- Multi-core and many-core processors handle multiple threads simultaneously

- GPUs and TPUs accelerate AI, ML, and graphics workloads

- Distributed and cloud architectures enable concurrent processing across thousands of nodes

Fact: The Summit supercomputer combines CPU and GPU architectures to reach a theoretical peak of about 200 petaflops, and it ranked first on the TOP500 list of the world’s fastest supercomputers from June 2018 until June 2020.

2. Scalability and Flexibility for Diverse Workloads

Modern computing architectures are designed to scale efficiently based on the needs of applications. Whether it’s a small enterprise deploying cloud services or a research center running HPC simulations, modern architectures offer flexibility in resource allocation and expansion.

Benefits include:

- Elastic scaling in cloud architectures to meet fluctuating demand

- Heterogeneous systems for optimized performance across AI, data analytics, and simulations

- Edge computing for low-latency, real-time processing without overloading central systems

Example: Netflix leverages cloud and distributed architectures to serve millions of users worldwide, dynamically adjusting computing resources based on demand.
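Elastic scaling usually comes down to a control rule that adjusts capacity to load. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core rule as desiredReplicas = ceil(currentReplicas x currentMetricValue / desiredMetricValue). The sketch below implements that formula with hypothetical minimum and maximum bounds; the real autoscaler adds refinements such as a tolerance band and stabilization windows, which are omitted here.

```python
import math

def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Scale the replica count in proportion to load, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    proposed = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, proposed))

print(desired_replicas(4, current_metric=80, target_metric=50))  # 7  (scale out)
print(desired_replicas(4, current_metric=10, target_metric=50))  # 1  (scale in)
```

Rounding up errs on the side of capacity, and the bounds keep a load spike or a metrics glitch from scaling a service to zero or to an unaffordable size.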

3. AI and Machine Learning Optimization

Modern architectures are increasingly AI-ready, providing the hardware and software frameworks necessary for training and deploying machine learning models efficiently.

Advantages:

- Accelerators like TPUs and NPUs reduce AI training time

- Memory hierarchies and high-speed interconnects improve large-scale data throughput

- Real-time inference at the edge enables applications like autonomous vehicles and smart cities

Case Study: Google’s TPU clusters enable real-time language translation and image recognition, demonstrating the benefits of AI-optimized architectures in production systems.

4. Energy Efficiency and Sustainability

Energy consumption is a major concern in large-scale computing systems. Modern architectures, particularly neuromorphic and heterogeneous systems, are designed to maximize computational output while minimizing power usage.

Benefits:

- Neuromorphic chips mimic brain-like efficiency for low-power AI tasks

- Multi-core and many-core designs optimize energy usage compared to equivalent single-core systems

- Cloud data centers adopt virtualization and workload optimization to reduce energy costs

Fact: Intel has reported that its Loihi neuromorphic chip can be more than 1,000 times more energy efficient than conventional processors on certain specialized AI workloads.
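The energy argument for multi-core designs follows from the standard approximation for a chip's dynamic power, P ≈ C x V² x f (switched capacitance times voltage squared times clock frequency). Lower clock frequencies allow lower supply voltages, so two slower cores can match one fast core's throughput on parallel work while drawing less power. A minimal sketch with normalized, hypothetical values, assuming perfectly parallel work and ignoring static (leakage) power:

```python
def dynamic_power(capacitance: float, voltage: float, frequency: float) -> float:
    """Classic CMOS dynamic power approximation: P = C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency

# Normalized baseline: one core at full voltage and frequency.
single_core = dynamic_power(capacitance=1.0, voltage=1.0, frequency=1.0)

# Hypothetical alternative: two cores at half frequency with voltage lowered to 0.8.
# Combined throughput matches the baseline (2 x 0.5) only if the work splits perfectly.
dual_core = 2 * dynamic_power(capacitance=1.0, voltage=0.8, frequency=0.5)

print(f"power relative to one fast core: {dual_core / single_core:.2f}")  # 0.64
```

Under these assumptions the dual-core design does the same work with about 36% less dynamic power; real gains are smaller because software rarely parallelizes perfectly and leakage power does not scale this way.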

5. Support for Advanced Applications and Innovation

Modern computing architectures enable technologies that were previously impossible, from quantum simulations to autonomous robotics and next-generation AI. By providing the right combination of speed, parallelism, and scalability, these architectures unlock new possibilities across industries.

Examples:

- Quantum computing for cryptography and optimization

- Cloud HPC for genomics and climate modeling

- Edge AI for real-time industrial automation and smart cities

Challenges and Limitations of Modern Computing Architectures

While modern computing architectures offer impressive performance and capabilities, they are not without challenges. Understanding these limitations is essential for developers, engineers, and organizations to design, implement, and maintain systems efficiently while avoiding potential pitfalls.

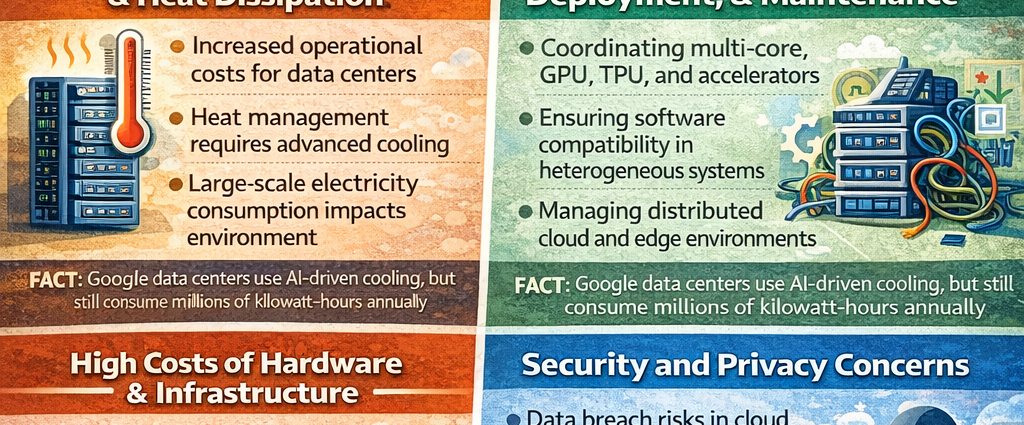

1. High Power Consumption and Heat Dissipation

Modern processors, GPUs, and accelerators consume significant energy, especially in high-performance computing (HPC) and AI workloads. High energy usage leads to:

- Increased operational costs for data centers and enterprises

- Heat generation that requires sophisticated cooling systems

- Environmental impact due to large-scale electricity consumption

Fact: Google’s data center fleet uses advanced cooling and AI-driven energy optimization to manage power consumption, yet its data centers still consume electricity on the scale of terawatt-hours per year, and large-scale AI workloads add to that demand.

2. Complexity in Design, Deployment, and Maintenance

Advanced computing architectures involve multiple components and technologies, making system design and maintenance highly complex. Challenges include:

- Coordinating multi-core, many-core, GPU, TPU, and accelerator integration

- Ensuring software compatibility with heterogeneous architectures

- Managing distributed systems across cloud and edge environments

Impact: Complex architectures require specialized skills in hardware, software, and system administration, which can limit adoption in smaller enterprises or research groups.

3. High Costs of Hardware and Infrastructure

Investing in modern computing architectures can be expensive due to:

- High-end GPUs, TPUs, and specialized accelerators

- HPC clusters and high-speed interconnects

- Cloud infrastructure subscriptions and ongoing maintenance

Example: Building a GPU-accelerated AI training cluster for deep learning can cost hundreds of thousands of dollars, making it inaccessible for smaller organizations without cloud solutions.

4. Security and Privacy Concerns

With distributed and cloud-based architectures, security and privacy become critical issues. Risks include:

- Data breaches in cloud environments

- Unauthorized access to sensitive scientific, financial, or personal data

- Vulnerabilities in AI and edge devices, which can be exploited by hackers

Best Practices: Encryption, access control, and secure virtualization frameworks are essential to mitigate risks in modern computing systems.

5. Technological and Compatibility Constraints

Modern architectures often rely on cutting-edge hardware and software, which may not be compatible with legacy systems or older software applications. Challenges include:

- Updating or rewriting applications to leverage GPU/TPU or distributed architectures

- Integration difficulties between edge, cloud, and on-premises systems

- Limited standardization in emerging fields like quantum or neuromorphic computing

Fact: Many organizations face technical debt when migrating from traditional CPU-based systems to heterogeneous or AI-optimized architectures, requiring careful planning and investment.
